How to reduce the memory requirement for a GPU pytorch training process? (finally solved by using multiple GPUs) - vision - PyTorch Forums

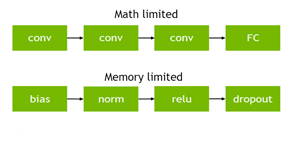

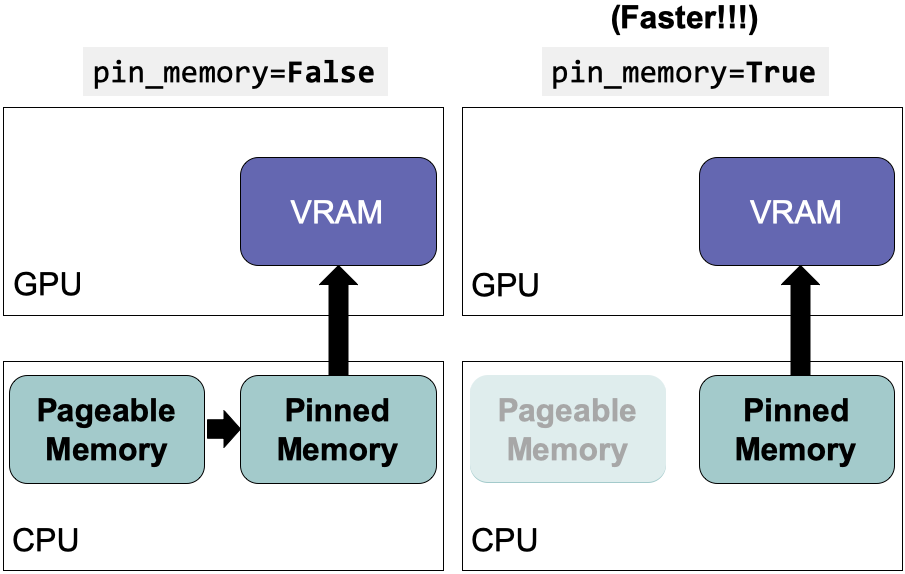

Optimize PyTorch Performance for Speed and Memory Efficiency (2022) | by Jack Chih-Hsu Lin | Apr, 2022 | Towards Data Science

RuntimeError: CUDA out of memory. Tried to allocate 9.54 GiB (GPU 0; 14.73 GiB total capacity; 5.34 GiB already allocated; 8.45 GiB free; 5.35 GiB reserved in total by PyTorch) - Course Project - Jovian Community

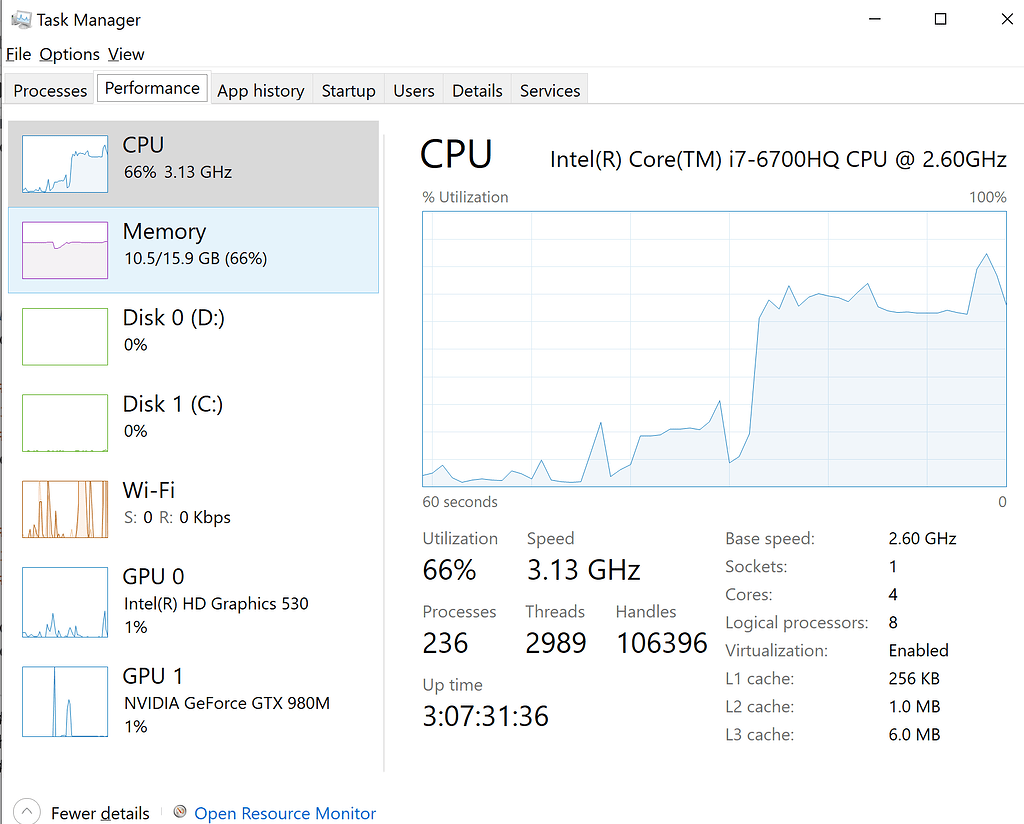

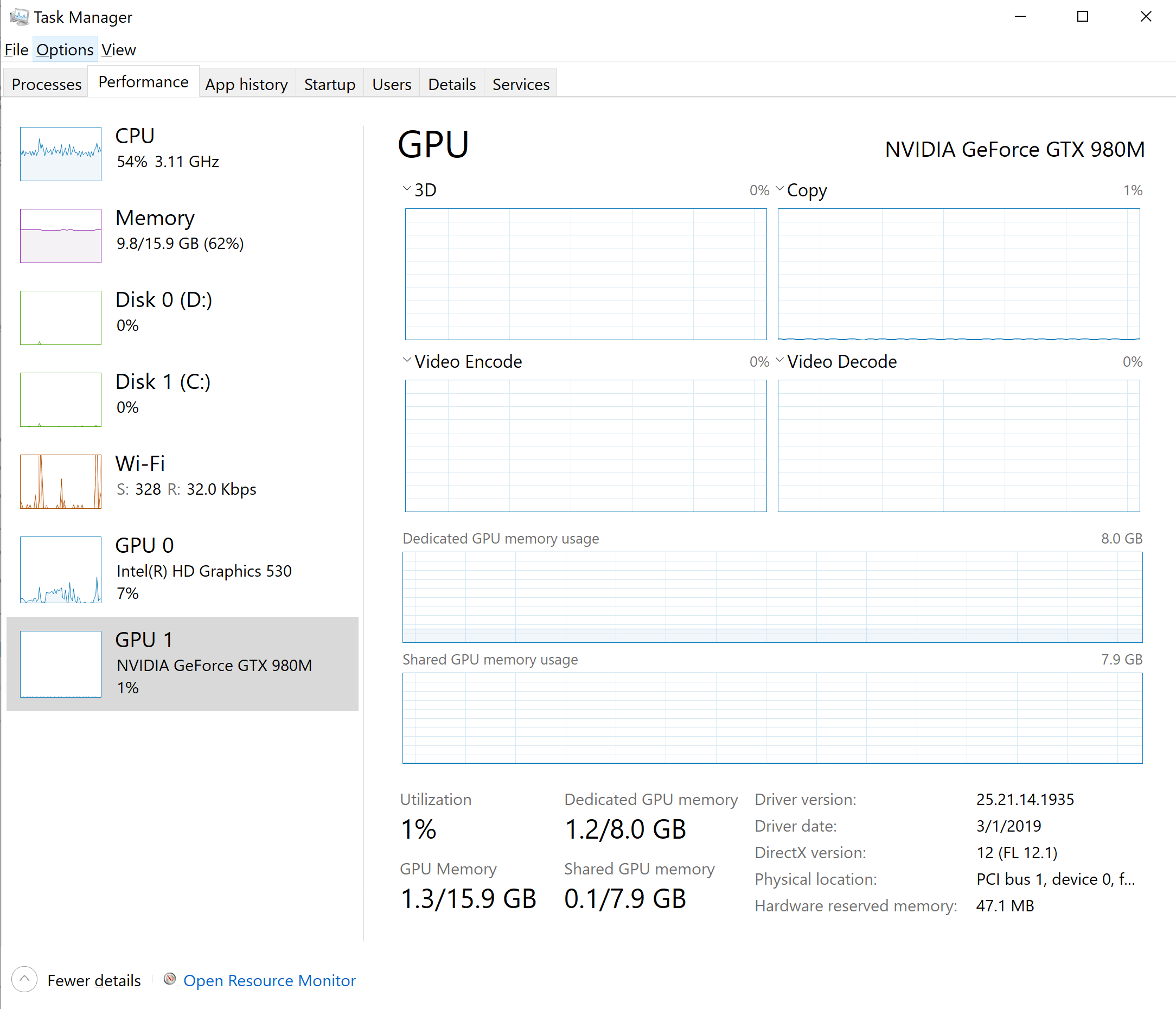

python - How can I decrease Dedicated GPU memory usage and use Shared GPU memory for CUDA and Pytorch - Stack Overflow

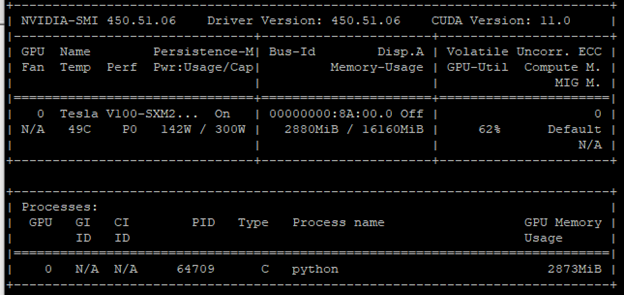

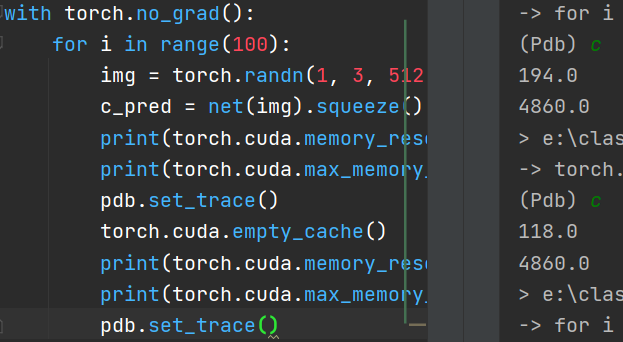

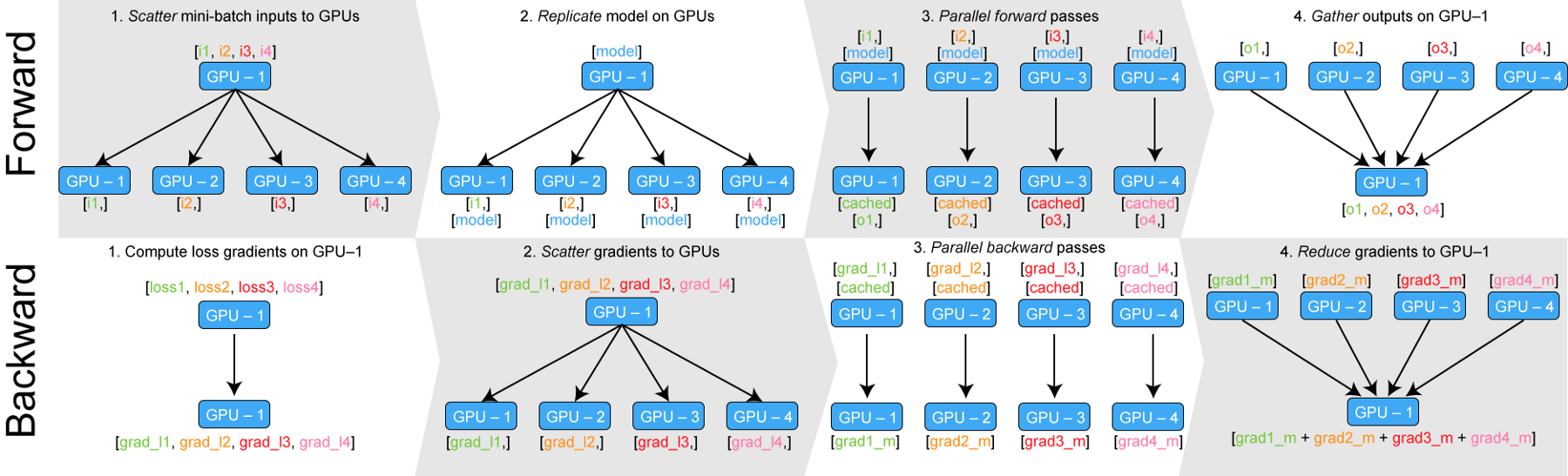

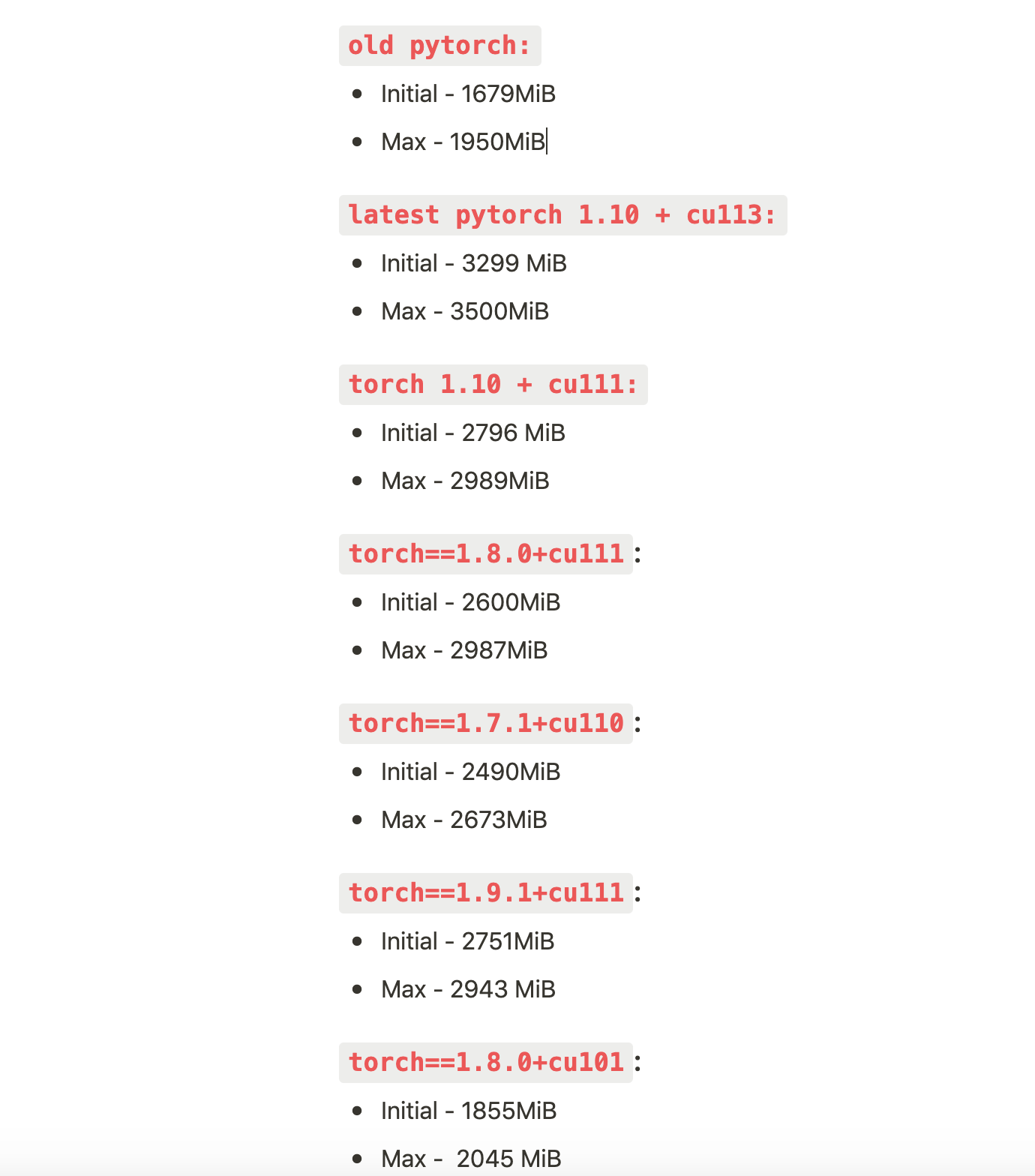

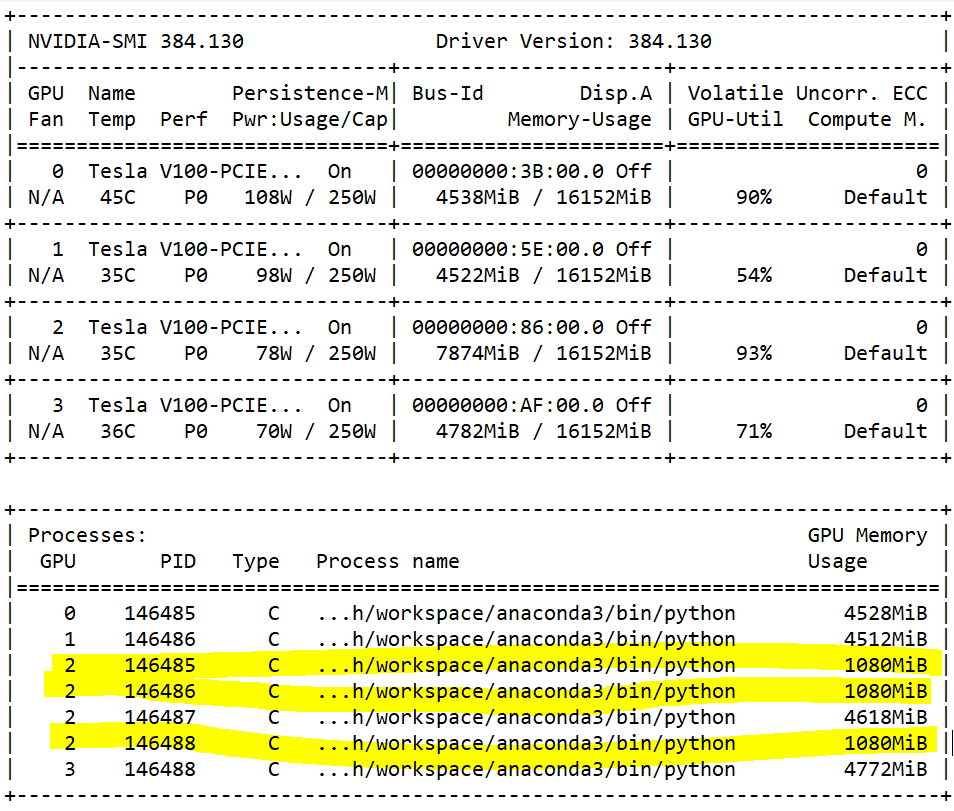

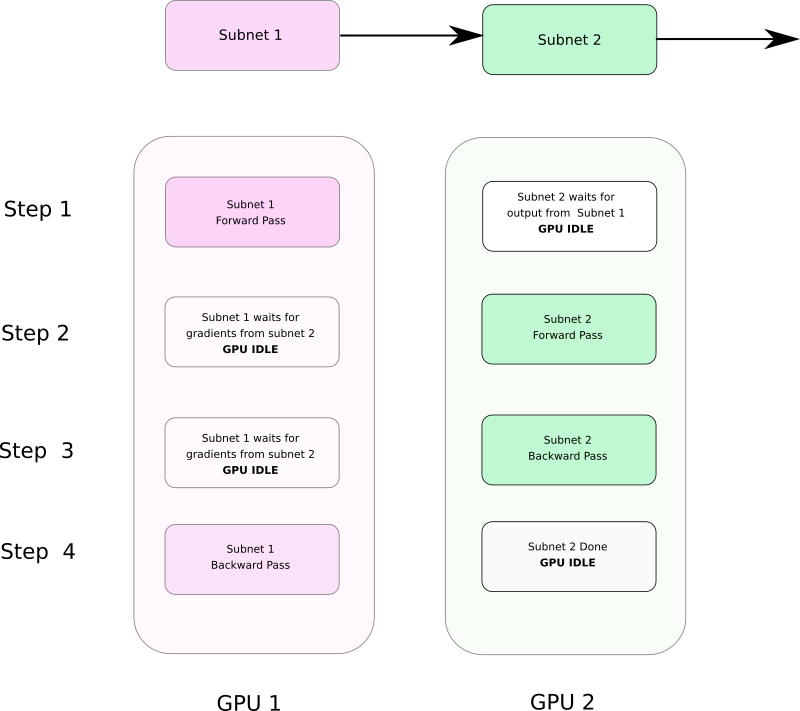

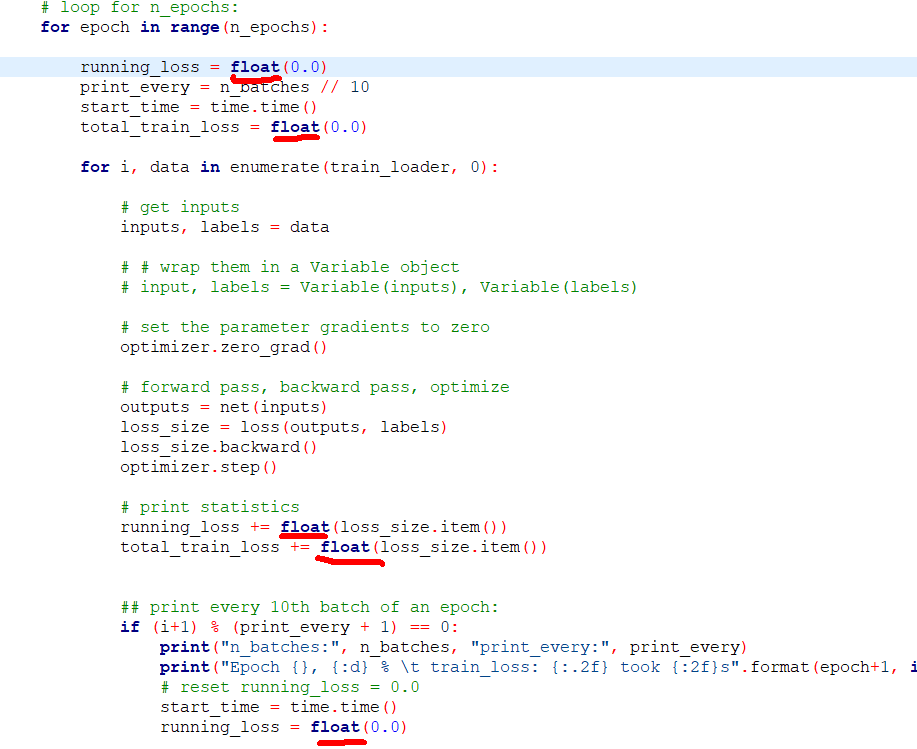

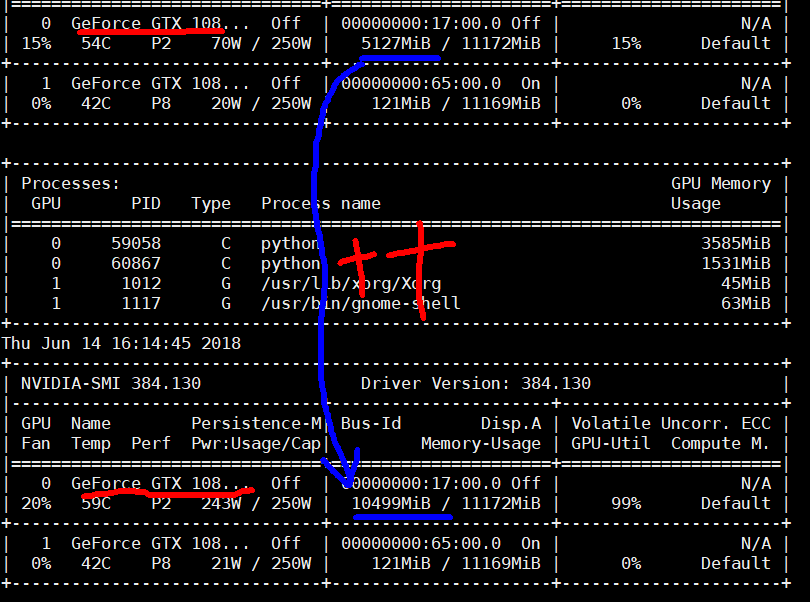

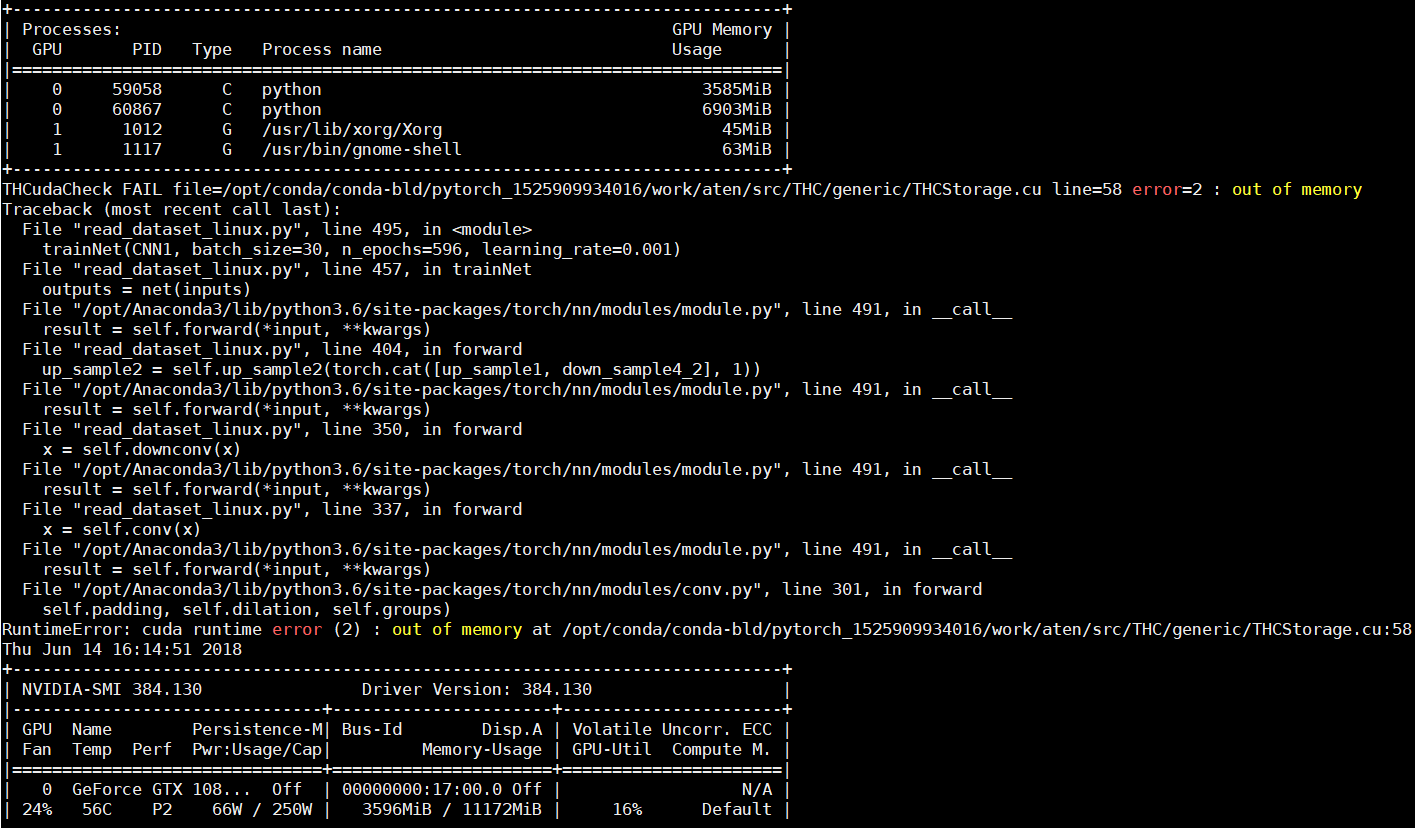
